Docker Basics

Prerequisites

- VirtualBox or VMware Workstation Pro installed.

- Virtual Machine [project-x-corp-svr] installed and configured.

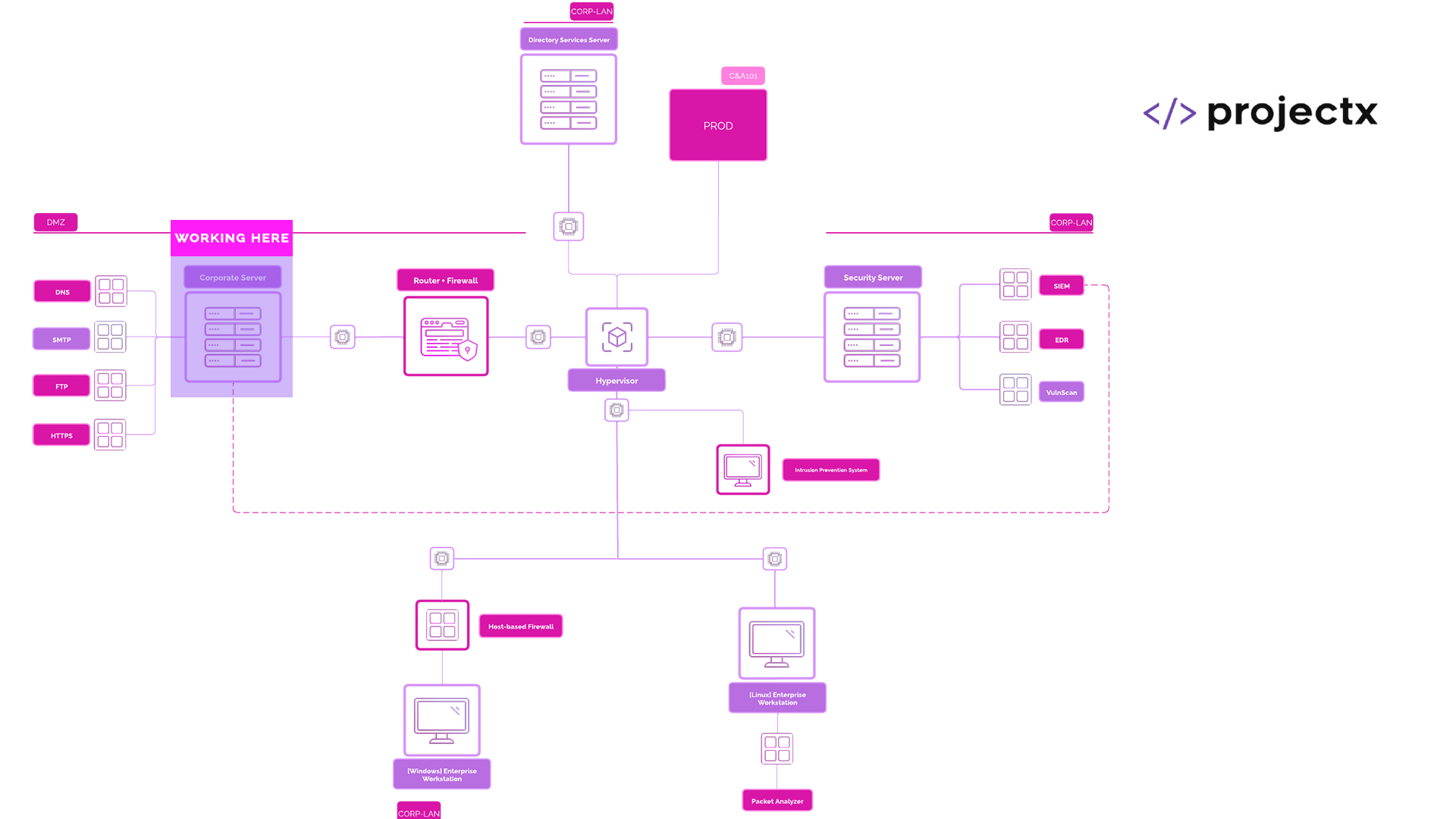

Network Topology

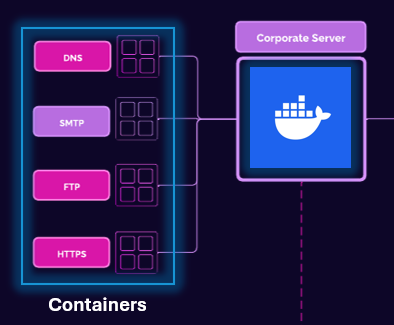

Containers

Containers are a lightweight, portable, and self-sufficient way to package software. They bundle the application code together with its dependencies, libraries, and configuration files, ensuring consistent execution across different environments.

^ That's a good ole' ChatGPT or Google definition.

What does that actually mean?

Containers are a bit confusing, but once you understand them, you will start to realize the benefits.

So far, we have been working with Virtual Machines (VMs), using our Type 2 Hypervisor to deploy and configure each one. Each VM comes with an entire Operating System.

This means each time we want to provision a new application that lives on top of the VM, we have to make sure the Operating System is configured correctly.

Chances are that at some point during Enterprise 101, you ran into an issue with your VM. Maybe it didn't boot, you couldn't get the screen to scale to full size, or your host machine slowed down while running VMs. This is one of the major drawbacks of deploying and maintaining your own self-hosted infrastructure (cloud labs coming soon?).

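Containers add another layer of abstraction on top of your underlying host machine.

The best way to think about containers is as "Operating System Virtualization". Instead of deploying and managing an entire operating system stack for every application, you isolate each application in its own container. All containers on a host share that host's kernel, while each container carries its own files, libraries, and configuration.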
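Under the hood, containers share the host's kernel while bringing their own files and libraries. One quick way to see this is to compare the kernel version reported on the host with the one reported inside a container. The sketch below is hypothetical (compare_kernels is an illustrative name) and assumes Docker is installed and can pull the public alpine image from DockerHub.

```shell
# Hypothetical helper: containers share the host's kernel but ship their
# own userland (files, libraries, package manager).
# Assumes Docker is installed and the public "alpine" image can be pulled.
compare_kernels() {
  echo "host kernel:      $(uname -r)"
  echo "container kernel: $(docker run --rm alpine uname -r)"
  # The userland differs: the container identifies itself as Alpine Linux,
  # not as the host's distribution.
  docker run --rm alpine head -n 2 /etc/os-release
}
```

On a Linux host such as [project-x-corp-svr], running compare_kernels should print the same kernel version twice, while /etc/os-release inside the container names Alpine Linux.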

Throughout NA101, we are going to be provisioning various applications (or servers). Instead of having to clone, reconfigure, and deploy a VM for each application, we will use a single virtual machine, [project-x-corp-svr], to host containers, and each container will run one standalone application.

In our case, the containers will be used to simulate "servers". So, we will have a DNS container running a DNS server (BIND9), an HTTPS web server (NGINX) hosting our ProjectX Internal Portal page, and an FTP server serving some files. Each of these services or servers will be compartmentalized into its own container.

Docker

In Enterprise 101, we deployed a single SMTP server on [project-x-corp-svr]. We installed the Docker engine to host this single SMTP container.

Docker (and its Docker Engine) is one technology for building and running containers, and the most popular one. It abstracts the operating system so that each container gets its own isolated environment.

Docker is quite awesome.

There are a few basic primitives or concepts to be familiar with when it comes to Docker.

Docker Images

A Docker Image is a standalone, lightweight package that contains everything needed to run a container. This could include the application code itself, third-party libraries, environment variables (such as passing in your API Key), and configuration files.

The best way to think of a Docker Image is like a blueprint of instructions. This blueprint provides everything necessary to run the application.

Once we have gathered everything this blueprint needs, we can build it into an image.

We create Docker images using the docker build command.

docker build -t projectx-image-dns .

Here we are building an image and, with the -t (tag) flag, naming it projectx-image-dns.

Where do we write and source these instructions?

Through a Dockerfile.

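Notice the lonely looking . at the end of the command above. This . is the build context: the directory (here, the current one) whose files are sent to Docker for the build. By default, Docker looks for a file named Dockerfile at the root of that context.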
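To see how the trailing . (the build context directory) relates to the Dockerfile, here is a minimal sketch assuming a hypothetical project layout with a second file at docker/Dockerfile.dev. The -f flag of docker build picks which Dockerfile to use, while the final . stays the build context.

```shell
# Sketch: the "." in `docker build` is the build context (a directory).
# The directory layout and the Dockerfile.dev name are hypothetical.
ctx="$(mktemp -d)"                 # stand-in for your project folder
mkdir -p "$ctx/docker"
printf 'FROM python:3.11\n' > "$ctx/Dockerfile"
printf 'FROM python:3.11\n' > "$ctx/docker/Dockerfile.dev"
cd "$ctx" || exit 1

if command -v docker >/dev/null 2>&1; then
  # Default: use ./Dockerfile at the root of the context "."
  docker build -t projectx-image-dns .
  # -f selects a different Dockerfile; the context is still "."
  docker build -t projectx-image-dns -f docker/Dockerfile.dev .
else
  echo "docker not found; files created in $ctx"
fi
```

Keeping the context and the Dockerfile separate means COPY instructions in either Dockerfile resolve paths relative to the same project folder.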

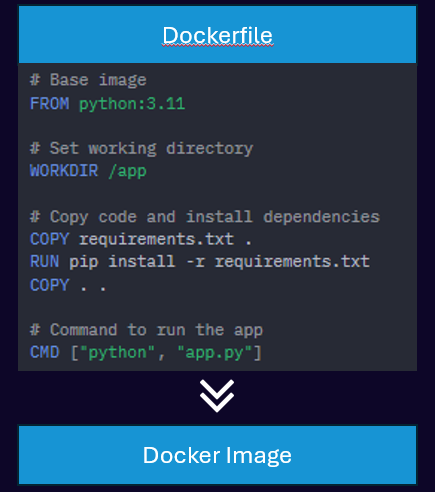

Dockerfile

The Dockerfile is the actual blueprint: the list of instructions provided to Docker. Docker looks for a file named Dockerfile and performs its instructions in order, from top to bottom. You can think of this like working in a Linux terminal, where you run one command at a time: cd, Enter, then ls, Enter.

Let's take a look at a sample Dockerfile.

```dockerfile
# Base image
FROM python:3.11

# Set working directory
WORKDIR /app

# Copy code and install dependencies
COPY requirements.txt .
RUN pip install -r requirements.txt
COPY . .

# Command to run the app
CMD ["python", "app.py"]
```

This Dockerfile performs the following:

- FROM python:3.11: Start from a pre-built base image, the official Python 3.11 image. Pre-built images come with a set of dependencies already installed (here, Python and pip).

- WORKDIR /app: Create a folder called /app (if it doesn't already exist) and set it as the working directory for the instructions that follow, similar to how you would cd into a directory.

- COPY requirements.txt .: Copy the file requirements.txt from our host machine into the image's working directory (/app).

- RUN pip install -r requirements.txt: Use pip to install the packages listed in requirements.txt.

- COPY . .: Copy everything in the build context (the folder we ran docker build from) into the image's working directory. This is where we could provide additional files, such as an index.html to showcase a web page.

- CMD ["python", "app.py"]: Set the default command (CMD) the container runs when it starts. This is similar to running python3 app.py ourselves to start our Python application.
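To try the sample Dockerfile end to end, it needs a build context containing requirements.txt and app.py. The sketch below scaffolds one; the folder name and the contents of app.py and requirements.txt are hypothetical placeholders, not files from the lab.

```shell
# Sketch: scaffold a build context for the sample Dockerfile.
# The folder name and the contents of app.py / requirements.txt are
# hypothetical placeholders.
proj="$(mktemp -d)/projectx-python-demo"
mkdir -p "$proj"

# One third-party dependency for pip to install
printf 'requests\n' > "$proj/requirements.txt"

# A tiny application that proves the dependency is available
cat > "$proj/app.py" <<'EOF'
import requests

print("Hello from the container! requests version:", requests.__version__)
EOF

# The sample Dockerfile from above
cat > "$proj/Dockerfile" <<'EOF'
# Base image
FROM python:3.11
# Set working directory
WORKDIR /app
# Copy code and install dependencies
COPY requirements.txt .
RUN pip install -r requirements.txt
COPY . .
# Command to run the app
CMD ["python", "app.py"]
EOF

ls "$proj"
```

On [project-x-corp-svr], cd into that folder and run docker build -t my-image . followed by docker run --rm my-image. Copying requirements.txt and running pip install before COPY . . is a common design choice: Docker caches each instruction as a layer, so editing only app.py invalidates the layers from COPY . . onward and the pip install layer is reused.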

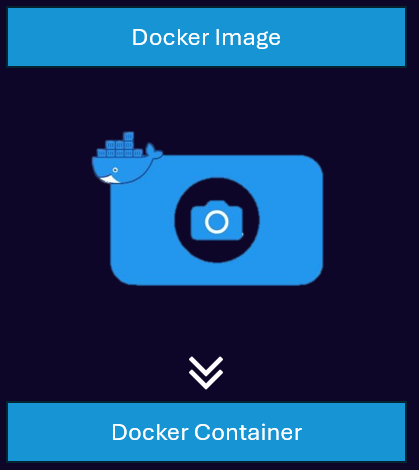

Docker Containers

Docker containers are the running instances of the image.

We deploy containers using the docker run command.

For example:

docker run --name my-container my-image

Here, we are naming our container my-container. The blueprint containing the dependencies, variables, and install instructions is sourced from the Docker Image, in this case my-image.

In other words, an image becomes a container when we run it: Image ---> Container.
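One image can back many containers, and removing a container leaves its image in place. The helper below is a hypothetical sketch of that lifecycle using only standard docker subcommands (run, ps, stop, rm, images); my-image and my-container are the placeholder names from the example above.

```shell
# Hypothetical helper: walk one image through the Image ---> Container
# lifecycle. Usage: container_lifecycle my-image my-container
container_lifecycle() {
  image="$1"
  name="$2"
  docker run -d --name "$name" "$image"   # create and start it in the background
  docker ps -a --filter "name=$name"      # the container now exists
  docker stop "$name"                     # stop it; it still exists, as Exited
  docker rm "$name"                       # delete the container
  docker images "$image"                  # the image itself is untouched
}
```

The -d (detached) flag returns control to your terminal right away and leaves the container running in the background; without it, your terminal stays attached to the container's output.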

Docker Repository (DockerHub)

Remember the FROM python:3.11 line from above?

python:3.11 is a Docker Image too. Most of the time we build Docker Images on top of pre-existing Docker Images. These pre-existing Docker Images contain runtime environments, such as Ubuntu, Alpine Linux, and more.

Instead of having to write an entire Docker Image for our baseline runtime (or operating system) environment, we can build on pre-existing ones.

We pull these pre-existing Docker Images from a registry: a centralized location where we can upload, share, and pull Docker Images. Each image lives in a repository inside the registry (for example, the python repository).

Docker runs its own registry, called DockerHub, for exactly this purpose.

By default, when you write something like FROM python:3.11, Docker pulls the image from DockerHub's official python repository (unless a copy is already stored locally).
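Short image names are expanded before Docker contacts a registry. With no registry host in the name, Docker Hub (docker.io) is assumed. Official single-name images live under the library/ namespace, and a missing tag means :latest. As a result, docker pull python:3.11 and docker pull docker.io/library/python:3.11 fetch the same image. The function below is a simplified, hypothetical sketch of that naming rule (it ignores digests such as @sha256:... and other edge cases), not Docker's own code.

```shell
# Hypothetical helper: expand a short image reference roughly the way Docker
# resolves it (simplified: ignores digests and uppercase edge cases).
# Runs in a subshell ( ... ) so its variables stay local.
qualify_image() (
  ref="$1"
  registry="docker.io"
  path="$ref"
  # A first path component containing "." or ":", or equal to
  # "localhost", is a registry host, e.g. ghcr.io/org/app.
  case "$ref" in
    */*)
      first="${ref%%/*}"
      case "$first" in
        *.*|*:*|localhost)
          registry="$first"
          path="${ref#*/}"
          ;;
      esac
      ;;
  esac
  # Official Docker Hub images live under the "library/" namespace.
  if [ "$registry" = "docker.io" ]; then
    case "$path" in
      */*) ;;
      *) path="library/$path" ;;
    esac
  fi
  # No tag after the last "/" means ":latest".
  case "${path##*/}" in
    *:*) ;;
    *) path="$path:latest" ;;
  esac
  echo "$registry/$path"
)

qualify_image python:3.11   # docker.io/library/python:3.11
qualify_image ubuntu        # docker.io/library/ubuntu:latest
```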

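To-Do: Add Your User to the docker Group

Open [project-x-corp-svr] and start a new terminal session.

Create the docker group. Installing Docker Engine usually creates it already, in which case this command just reports that the group exists.

sudo groupadd docker

Add your user to the docker group.

sudo usermod -aG docker $USER

Activate the new group membership in your current shell (alternatively, log out and back in).

newgrp docker

This allows us to run docker commands without typing sudo docker every single time.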
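To confirm the change took effect, compare the groups recorded for your account with the groups of your current shell session. usermod updates the first immediately, but the second only changes after newgrp docker or a fresh login. has_group is a hypothetical helper name used only in this sketch.

```shell
# Hypothetical helper: succeed if the first argument appears among the rest.
# Runs in a subshell ( ... ) so its variables do not leak.
has_group() (
  want="$1"
  shift
  for g in "$@"; do
    [ "$g" = "$want" ] && exit 0
  done
  exit 1
)

me="$(id -un)"
# Groups recorded for the account (updated right away by usermod).
# $(...) is unquoted on purpose so each group becomes one argument.
if has_group docker $(id -nG "$me"); then
  echo "account $me: in the docker group"
else
  echo "account $me: not in the docker group"
fi
# Groups of this shell session (needs newgrp docker or a new login)
if has_group docker $(id -nG); then
  echo "current session: docker group active"
else
  echo "current session: docker group not active yet"
fi
```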

A Few Additional Commands

Here are a few additional (and helpful) commands we will use while we deploy and manage our containers.

- docker ps: Show a list of running containers.

- docker ps -a: Show a list of all containers, both running and stopped.

- docker images: Show a list of all images. Every time we run docker build ..., we are creating a new image.

- docker exec -it my-container-name /bin/bash: Open an interactive shell inside a running container. The -i flag keeps input open, -t allocates a terminal, and /bin/bash is the shell we start.

- docker logs my-container: Show the logs of a running (or stopped) container.
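Several of these commands are often combined when checking on one container. The helper below is a hypothetical sketch that relies only on documented docker ps --filter/--format options and docker logs --tail; container_status is an illustrative name.

```shell
# Hypothetical helper: quick status check for one container.
# Usage: container_status my-container
container_status() {
  name="$1"
  # Name, image, and status (Up ... / Exited ...) of matching containers
  docker ps -a --filter "name=$name" --format 'table {{.Names}}\t{{.Image}}\t{{.Status}}'
  # Only the last 20 log lines, instead of the whole history
  docker logs --tail 20 "$name"
}
```

docker exec -it only works with a shell that exists inside the image. Many minimal images (for example, Alpine-based ones) do not include bash, so use docker exec -it my-container-name /bin/sh for those.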